I would like to share how we can build a friend recommendation system using some of the capabilities of MaxCompute, Alibaba Cloud's Big Data computing platform, and finally provide a demonstration of a social friend recommendation system. Big Data is a hot topic right now, and if we want to make use of this data, we need to consider three elements.

The first element is massive data. The more data we have, the better, because only with enough data can we tap into its hidden potential. The second element is the ability to process data: we need to be able to process our data quickly to find the meaning hidden within it. The third element is commercialization. While more data is better, volume is not the only important factor; the data also has to apply to a specific scenario. In this article, we take a recommendation system as an example to see how Big Data helps improve recommendations.

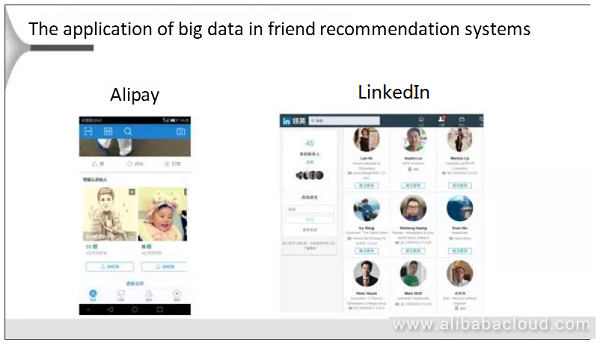

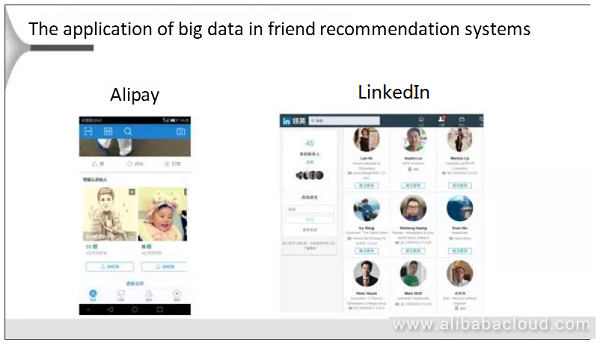

On the left is Alipay's friend recommendation feature, which is similar to what other social platforms such as LinkedIn offer. When we open Alipay, a column below shows people who may be your friends; generally, these are people you know but may not have added yet. On the right is LinkedIn, a social networking and job search site, which provides similar recommendations. It shows users you may know and tells you how many degrees of separation lie between you and each of them, up to three degrees. LinkedIn places the most relevant suggestions first and the less relevant ones further back. Both are typical friend recommendation systems.

So how do you recommend a friend to a user? Starting from two users who are not yet friends, we determine the friends they have in common and use that to make a recommendation. For example, if two users have several friends in common, we assume they are likely to know each other. That, in essence, is how the process works.

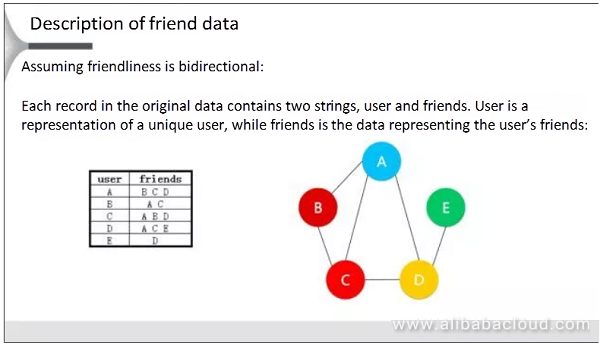

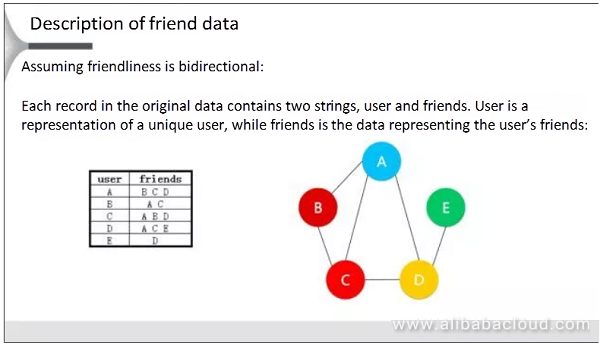

The right side of the picture shows social relationships between people; for example, A and B are friends. We use five people, A to E, to illustrate this. To have a machine analyze these relationships, we need to convert the social graph on the right into data that a machine can recognize, shown on the left-hand side. For example, A is friends with B, C, and D, so on the left side, A, B, C, and D appear together in the line that records A's friendships.
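A minimal Python sketch of this conversion follows, assuming each input line lists a user followed by that user's friends, separated by spaces (the figure may use a different layout). `SAMPLE_LINES`, `parse_adjacency`, and `is_symmetric` are hypothetical names, and the five-user graph is invented so that A is friends with B, C, and D and the common-friend counts match those in the next paragraph.

```python
from typing import Dict, List, Set

# Hypothetical input: each line is "user friend1 friend2 ...".
# The graph is invented for illustration; A is friends with B, C, and D.
SAMPLE_LINES: List[str] = [
    "A B C D",
    "B A C",
    "C A B D",
    "D A C E",
    "E D",
]


def parse_adjacency(lines: List[str]) -> Dict[str, Set[str]]:
    """Convert 'user friend1 friend2 ...' lines into {user: {friends}}."""
    graph: Dict[str, Set[str]] = {}
    for line in lines:
        tokens = line.split()
        if not tokens:
            continue  # ignore blank lines
        user, friends = tokens[0], tokens[1:]
        graph.setdefault(user, set()).update(friends)
    return graph


def is_symmetric(graph: Dict[str, Set[str]]) -> bool:
    """Friendship is mutual, so every edge must appear in both users' lists."""
    return all(
        user in graph.get(friend, set())
        for user, friends in graph.items()
        for friend in friends
    )


if __name__ == "__main__":
    graph = parse_adjacency(SAMPLE_LINES)
    print(sorted(graph["A"]))   # ['B', 'C', 'D']
    print(is_symmetric(graph))  # True
```

Because friendship is mutual, a symmetry check like this is a cheap way to catch inconsistent input before it is handed to any further processing.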

We can then pass this information to the machine for analysis. For example, once we analyze the data, we may find that A and E have one friend in common, B and D have two, and C and E have one. We can then recommend B and D to each other as potential friends and place this recommendation first, with A and E and C and E further back. In other words, potential friends with more friends in common are ranked higher, and those with fewer are ranked lower. That, at least, is the result we want.
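On a single machine, this ranking can be computed directly. The sketch below is an in-memory illustration, not the article's MaxCompute job; `GRAPH` is an invented five-user graph (A is friends with B, C, and D), and `recommend` is a hypothetical helper that counts the common friends of every pair of users who are not yet friends and sorts the pairs by that count.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple

# Hypothetical graph (invented for illustration): user -> set of friends.
GRAPH: Dict[str, Set[str]] = {
    "A": {"B", "C", "D"},
    "B": {"A", "C"},
    "C": {"A", "B", "D"},
    "D": {"A", "C", "E"},
    "E": {"D"},
}


def recommend(graph: Dict[str, Set[str]]) -> List[Tuple[str, str, int]]:
    """Return (user_a, user_b, common_friends) for non-friends, best first."""
    results: List[Tuple[str, str, int]] = []
    for a, b in combinations(sorted(graph), 2):
        if b in graph[a]:
            continue  # already friends: nothing to recommend
        common = len(graph[a] & graph[b])
        if common > 0:
            results.append((a, b, common))
    # More common friends first; ties broken alphabetically for stable output.
    results.sort(key=lambda row: (-row[2], row[0], row[1]))
    return results


if __name__ == "__main__":
    for row in recommend(GRAPH):
        print(row)
    # ('B', 'D', 2)
    # ('A', 'E', 1)
    # ('C', 'E', 1)
```

Checking every pair of users grows quadratically with the number of users, which is fine for five users but not for a real social network; this is why the computation is distributed with MapReduce.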

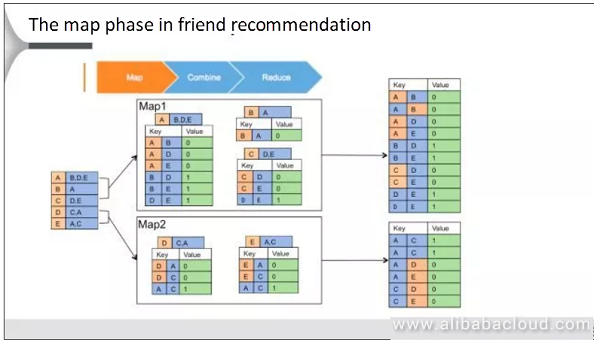

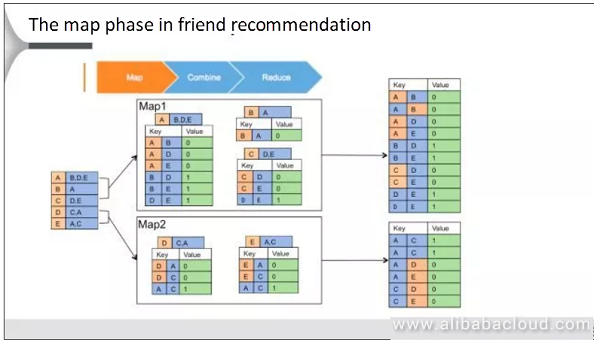

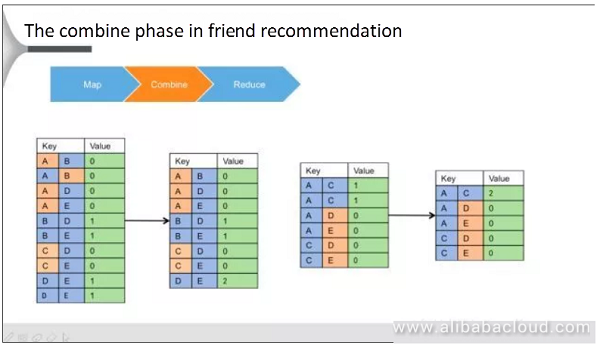

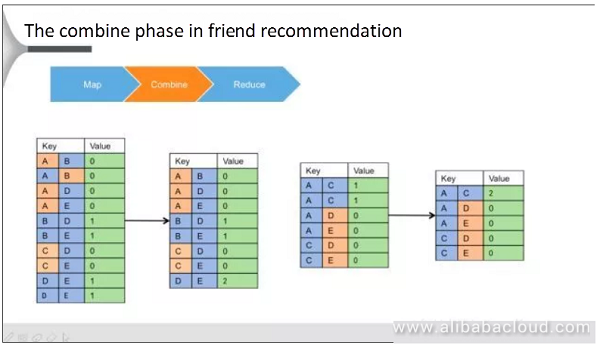

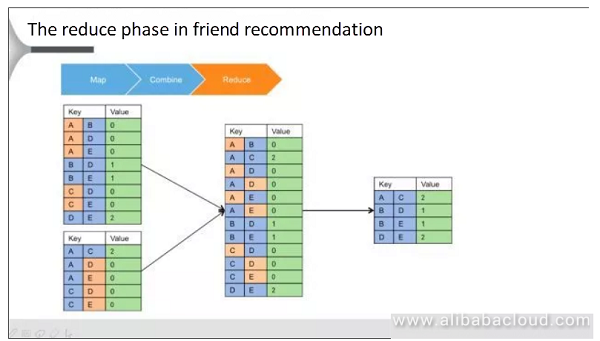

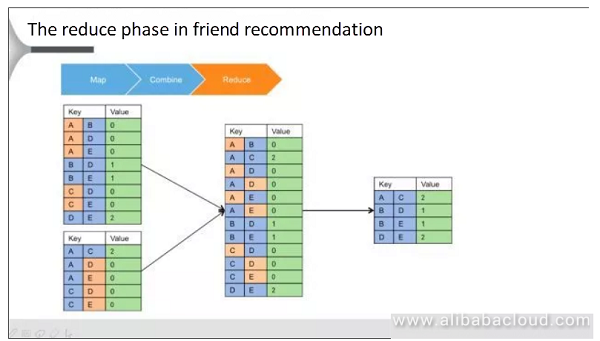

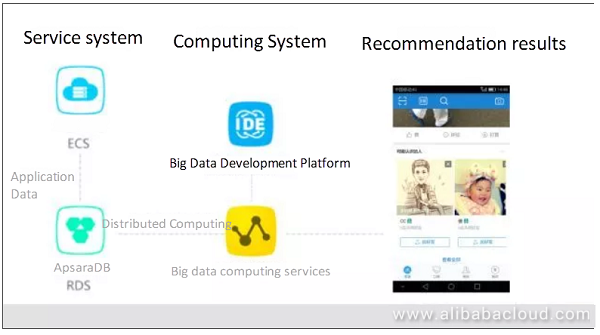

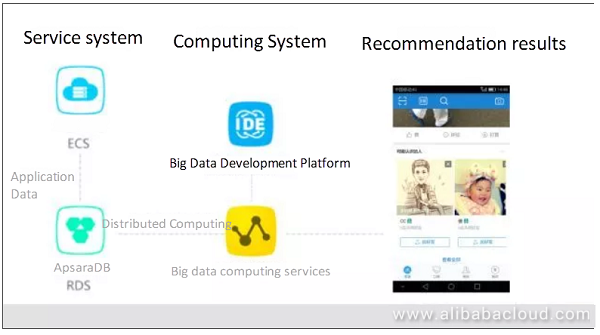

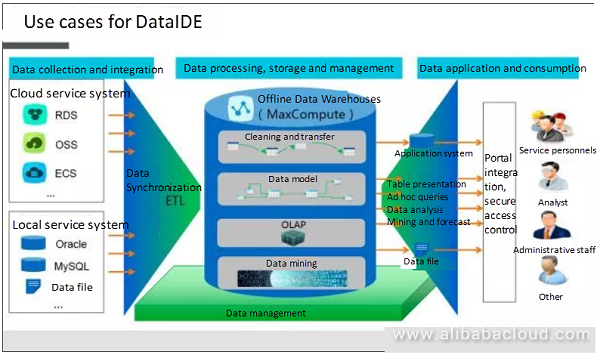

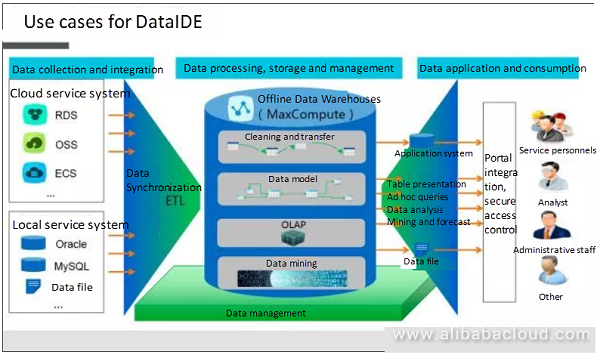

Analysis Model for Friend Recommendation Systems

How can we compute this at scale? A common approach is a computational model such as MapReduce, a programming model for parallel computation over large-scale datasets. Here it consists of three phases: Map, Combine (an optional local pre-aggregation), and Reduce. We will use friend recommendation as the example.

First, input the data on the left, in the format the machine can recognize. The Map phase then splits the data into two different datasets and converts each record into key-value pairs.

For example, what does the line containing A, B, D, and E convert to? The pair A and B gets the value 0, indicating that the two are already friends. Two users who both appear in A's friend list, on the other hand, are not known to be friends but share A as a common friend, so their pair gets the value 1; this is why B and E, and D and E, below also have the value 1. Every original row is converted into key-value pairs in the same way, as shown on the right. That is the whole Map step: each row is split into user pairs, and each pair becomes a key with a value of 0 or 1.
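The Map step can be sketched in Python as follows. This is a hypothetical local illustration, not MaxCompute's Java MapReduce API: `map_line` takes one adjacency line (a user followed by that user's friends), emits each (user, friend) pair with value 0, and emits every pair of that user's friends with value 1. Pairs are stored in alphabetical order by the helper `ordered`, an assumption that makes A-B and B-A the same key.

```python
from itertools import combinations
from typing import Iterator, Tuple

Pair = Tuple[str, str]


def ordered(x: str, y: str) -> Pair:
    """Canonical key: the same two users always produce the same pair."""
    return (x, y) if x <= y else (y, x)


def map_line(line: str) -> Iterator[Tuple[Pair, int]]:
    """Map one 'user friend1 friend2 ...' line to (pair, value) records.

    Value 0: the two users are already friends.
    Value 1: the two users share this line's user as a common friend.
    """
    tokens = line.split()
    if not tokens:
        return  # nothing to emit for a blank line
    user, friends = tokens[0], sorted(set(tokens[1:]))
    for friend in friends:
        yield ordered(user, friend), 0
    for f1, f2 in combinations(friends, 2):
        yield ordered(f1, f2), 1


if __name__ == "__main__":
    for record in map_line("A B D E"):
        print(record)
```

For the line `A B D E`, this yields (A, B), (A, D), and (A, E) with value 0, and (B, D), (B, E), and (D, E) with value 1, consistent with the B-E and D-E values described in the text.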

The Combine step first summarizes the data locally to find redundant records. For example, if the pair A and B has the value 0 and the pair B and A also has the value 0, the same relationship is represented twice, so only one record needs to be kept. The image shows the two datasets after they have been summarized locally in this way.
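A combiner for this scheme has one subtlety: 0 is a marker meaning "already friends", not a count, so it must not simply be added to the 1s. The sketch below is a hypothetical local illustration (`merge_values` and `combine` are made-up names, and values are encoded as described above: 0 for existing friends, 1 per common friend). It collapses all records for a pair within one mapper's output into a single record: 0 if any record says the pair are friends, otherwise the sum of the common-friend counts. Because partial results keep the same meaning, the same rule can safely be applied again to combined outputs.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Pair = Tuple[str, str]


def merge_values(values: Iterable[int]) -> int:
    """0 marks 'already friends' and absorbs everything; otherwise sum counts."""
    vals = list(values)
    return 0 if 0 in vals else sum(vals)


def combine(records: Iterable[Tuple[Pair, int]]) -> Dict[Pair, int]:
    """Collapse one mapper's (pair, value) records to one value per pair."""
    grouped: Dict[Pair, List[int]] = defaultdict(list)
    for pair, value in records:
        grouped[pair].append(value)
    return {pair: merge_values(vals) for pair, vals in grouped.items()}


if __name__ == "__main__":
    # Hypothetical records emitted by one mapper for three input lines.
    local_records = [
        (("A", "B"), 0), (("A", "C"), 0), (("B", "C"), 1),  # line "A B C"
        (("A", "B"), 0), (("B", "D"), 0), (("A", "D"), 1),  # line "B A D"
        (("A", "C"), 0), (("C", "D"), 0), (("A", "D"), 1),  # line "C A D"
    ]
    print(combine(local_records))
    # {('A', 'B'): 0, ('A', 'C'): 0, ('B', 'C'): 1, ('B', 'D'): 0, ('A', 'D'): 2, ('C', 'D'): 0}
```

The duplicated A-B record collapses to a single 0, while the two 1s for A-D become a partial count of 2.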

The third step is the Reduce phase, which merges the data from both datasets and produces a single value for each key. This is the final computation result. If two users are already friends, their value is 0 and there is no need to recommend them to each other, so pairs such as A and B are deleted. We keep only the pairs whose value is greater than zero: these users are not yet friends, and the value is the number of friends they have in common. This is the desired result.
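A matching Reduce step, again as a hypothetical local sketch: after the shuffle, each pair arrives with the values produced by the mappers (or combiners). The made-up helper `reduce_pair` drops the pair if any value is 0 (already friends) and otherwise outputs the pair with its total number of common friends.

```python
from typing import Iterable, Optional, Tuple

Pair = Tuple[str, str]


def reduce_pair(pair: Pair, values: Iterable[int]) -> Optional[Tuple[str, str, int]]:
    """Return (user_a, user_b, common_friends), or None if no recommendation."""
    vals = list(values)
    if not vals or 0 in vals:
        return None  # already friends (or no data): nothing to recommend
    return pair[0], pair[1], sum(vals)


if __name__ == "__main__":
    # Hypothetical grouped input after the shuffle: pair -> combined values.
    shuffled = {
        ("A", "B"): [0, 0],  # already friends, so dropped
        ("B", "D"): [1, 1],  # two common friends
        ("A", "E"): [1],     # one common friend
    }
    for pair, values in shuffled.items():
        row = reduce_pair(pair, values)
        if row is not None:
            print(row)
    # ('B', 'D', 2)
    # ('A', 'E', 1)
```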

Friend Recommendation Systems on Alibaba Cloud

How do we build the architecture of a friend recommendation system on Alibaba Cloud? Consider a social application, which is also a business system. Its front end is deployed on Alibaba Cloud Elastic Compute Service (ECS), and the data it receives is saved in Alibaba Cloud's ApsaraDB for RDS database service. This is the existing social networking application. Because this business system continuously produces data, we need a way to analyze that data.

First, we extract the data from the database into MaxCompute, Alibaba Cloud's Big Data computing service. This is very similar to the ETL process of a traditional data warehouse. Alibaba Cloud's Big Data development platform is then used to analyze and process the data.

Using this platform, we can quickly and easily develop Big Data processing workflows, such as data seeding or data generation. The output is an analysis result, which the front-end application then needs to read and display to users.

Technical Features of MaxCompute

The main technical features of MaxCompute are as follows:

- Distributed: distributed clusters, cross-cluster technology, and flexible scalability.

- Secure: automatic storage error correction, a sandbox mechanism, and multiple backups.

- Easy to use: a standard API, full SQL support, and upload and download tools.

- Permission control: multi-tenant management, user permission policies, and data access policies.

Use Cases of MaxCompute

You can use MaxCompute to build your data warehouse. MaxCompute can also serve as a distributed application system; for example, you can use MapReduce computations or wide tables to build an appropriate workflow. Data analysis is rarely a one-off task; it is usually cyclic. For daily analysis, you can create a workflow for the task in MaxCompute and set a periodic schedule that runs it once a day, and MaxCompute then calls the periodic task according to that workflow. MaxCompute is also useful for machine learning, because machine learning can use the data that MaxCompute has analyzed. Once MaxCompute or a similar service has analyzed the data, you can place the result on a machine learning platform, which trains a model on the data and produces the model you want.

Alibaba Cloud DataWorks

Another complement to MaxCompute is DataWorks (formerly DataIDE), Alibaba Cloud's Big Data development environment. DataWorks provides an efficient and secure offline data development environment. DataWorks only handles the development of data task workflows, while MaxCompute handles the underlying data processing and analysis. In other words, DataWorks is a data development service built on top of MaxCompute that helps us make better use of it. We can therefore develop in DataWorks instead of working directly in MaxCompute, which is far more convenient.

The image above represents a use case of the entire DataWorks application. When we perform data analysis, we first need to integrate the original data, which we can do in DataWorks, and save all of it in MaxCompute. We can also use DataWorks for post-processing, storage, and other operations. Throughout the data analysis process, DataWorks can handle data storage, analysis, processing, clustering, and so on.

MaxCompute Application Development Process

The MaxCompute application development process consists of the following six steps:

- Install and configure the environment

- Develop an MR program

- Test scripts in local mode

- Import JAR packages

- Upload to the MaxCompute project space

- Use MR on MaxCompute

The next step is simple testing, which lets us confirm after development that the code works correctly. The input is test data. The output is a table with three columns: the first is user A, the second is user B, and the third is the number of friends they have in common. We only need to check these three columns and then run the test.
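The local test described here can be approximated with a small, self-contained Python harness. This is a hypothetical sketch that simulates the whole job in memory (the input split across mappers, then Map, Combine, shuffle, and Reduce) instead of running the real MaxCompute MR job, and it checks that each output row has the three columns described: user A, user B, and the number of common friends. `TEST_INPUT` is invented test data, and `run_job`, `map_line`, and `merge` are illustrative names.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, Iterable, List, Tuple

Pair = Tuple[str, str]
Row = Tuple[str, str, int]

# Hypothetical test data: "user friend1 friend2 ...", one line per user.
TEST_INPUT = ["A B C D", "B A C", "C A B D", "D A C E", "E D"]


def map_line(line: str) -> Iterable[Tuple[Pair, int]]:
    tokens = line.split()
    if not tokens:
        return []
    user, friends = tokens[0], sorted(set(tokens[1:]))
    out = [(tuple(sorted((user, f))), 0) for f in friends]  # already friends
    out += [((f1, f2), 1) for f1, f2 in combinations(friends, 2)]  # common friend
    return out


def merge(values: List[int]) -> int:
    return 0 if 0 in values else sum(values)  # 0 marks existing friends


def run_job(lines: List[str], num_mappers: int = 2) -> List[Row]:
    # Split the input across mappers; each mapper combines its own output.
    splits = [lines[i::num_mappers] for i in range(num_mappers)]
    shuffled: Dict[Pair, List[int]] = defaultdict(list)
    for split in splits:
        local: Dict[Pair, List[int]] = defaultdict(list)
        for line in split:
            for pair, value in map_line(line):
                local[pair].append(value)
        for pair, values in local.items():        # Combine
            shuffled[pair].append(merge(values))   # Shuffle by key
    rows: List[Row] = []
    for pair, values in shuffled.items():         # Reduce
        total = merge(values)
        if total > 0:
            rows.append((pair[0], pair[1], total))
    return sorted(rows, key=lambda r: (-r[2], r[0], r[1]))


if __name__ == "__main__":
    result = run_job(TEST_INPUT)
    assert all(len(row) == 3 for row in result)  # user A, user B, count
    for row in result:
        print(row)
    # ('B', 'D', 2)
    # ('A', 'E', 1)
    # ('C', 'E', 1)
```

Running `run_job` with different `num_mappers` values should return the same rows; if it does not, the combine step is not safe to apply locally.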

Next, we run the code locally; this is the local development test. For local testing, the test data is already prepared. The first step is to choose which project to process. The second step is to select the input and output tables; the output table is where the results are saved. Once the configuration is set, click Run to get the result.

After a successful local development test, we package the program as a JAR and upload it to Alibaba Cloud, that is, to the MaxCompute cluster. Once the program has been packaged into a JAR, add it as a resource by uploading the JAR through resource management. This completes the upload of the locally developed and tested MR package to the MaxCompute cluster.

Once you have uploaded the JAR, you can use it to create a new task: give the task a name and indicate which JAR the task uses. Because we are running an MR process, we select the OPENMR module. On the task's edit page, we specify that this OPENMR task should use the friend recommender JAR, and at the bottom we specify the program logic in the JAR that it should run. Once these settings are complete, click Run to get the results. In summary, we test the program locally, upload the resource to the MaxCompute cluster, and then run the locally developed JAR on the cluster. This is the entire development and deployment process.

Read similar articles and learn more about Alibaba Cloud's products and solutions at www.alibabacloud.com/blog.

Source: https://www.alibabacloud.com/blog/building-a-social-recommendation-system-based-on-big-data_593980?utm_content=m_1000017072
